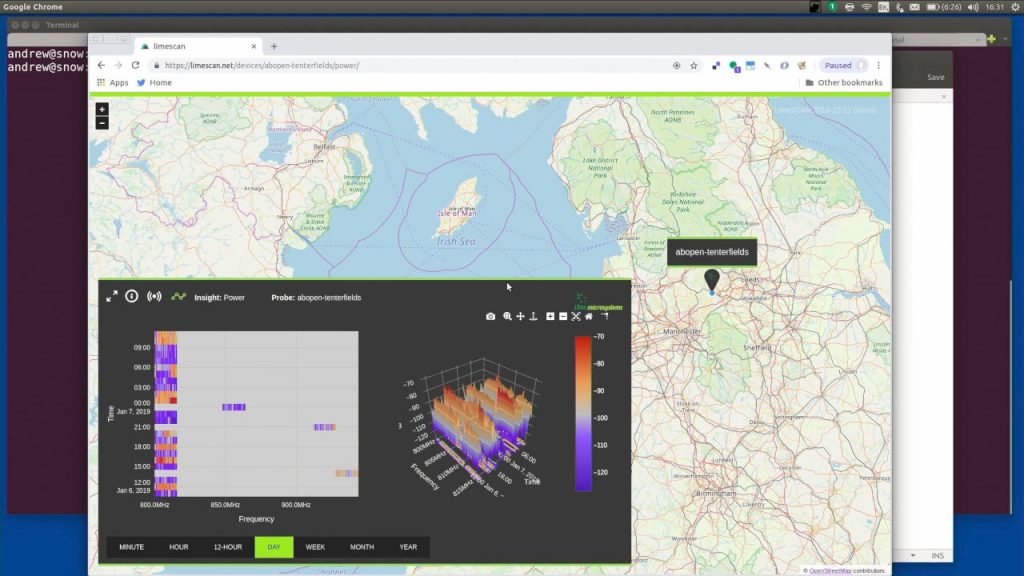

The LimeNET Micro campaign has posted a demonstration of an alpha-status project dubbed LimeSCAN, taking advantage of the ability to run software defined radio and general purpose processing tasks on a single standalone unit to scan for radio spectrum usage data and share it via a private Ethereum blockchain.

“The intention is for LimeSCAN to become a public resource for crowdsourced radio spectrum information, where anyone is free to operate a probe that uploads data, and to make use of the available data — both via the web interface and an open data API,” explains Andrew Back. “Which raises a number of questions, including, how can we verify data and be certain that it came from a given source?

“A popular mechanism for verifying files and data is to create a cryptographic signature or ‘digest’ using a hash function such as SHA256. However, then you need to be sure that the digest you use to validate your data came from a trusted source. One way of doing this in a decentralised manner is to store it on a blockchain, where you can be certain that a given account was responsible for the transaction which recorded that digest, and that it will also be immutable.”
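As a rough illustration of the digest check Andrew describes, the following minimal sketch uses Python's standard `hashlib` module. The record contents and the helper names `sha256_digest` and `matches_digest` are hypothetical, since the actual LimeSCAN data format and blockchain interface are not described here; the trusted digest is simply held in a variable where the real system would read it from the blockchain.

```python
import hashlib
import hmac


def sha256_digest(data: bytes) -> str:
    """Return the SHA-256 digest of data as a 64-character hex string."""
    return hashlib.sha256(data).hexdigest()


def matches_digest(data: bytes, trusted_digest: str) -> bool:
    """Check data against a digest obtained from a trusted source."""
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(sha256_digest(data), trusted_digest)


# Hypothetical scan record, invented for this example.
record = b"freq_mhz=868.0,power_dbm=-71.5"

# Stand-in for the digest that would be recorded in a blockchain transaction.
trusted = sha256_digest(record)

print(matches_digest(record, trusted))                 # True
print(matches_digest(record + b" tampered", trusted))  # False
```

Any change to the record, however small, produces a different digest, which is why the trustworthiness of the stored digest matters more than the storage of the data itself.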

The demonstration video marks the first time the revised LimeNET Micro v2 hardware, which breaks out more of the functionality of the Raspberry Pi Compute Module 3 and vastly improves the bandwidth available from the LMS7002M transceiver, has been seen in public. The LimeSCAN software, meanwhile, is currently in closed alpha, though its read-only web interface is publicly accessible.

More information is available in the Crowd Supply campaign update.

Over on the LimeSDR Mini campaign page, meanwhile, a video showcasing the integration of the compact software defined radio with the Raspberry Pi powered PiTop modular laptop has been published.

“The PiTop is a rather cool modular laptop that you build yourself and which is based around the Raspberry Pi platform,” Andrew writes. “Eminently hackable, we thought it would be fun to see if we could integrate a LimeSDR Mini for software-defined radio development on the move.

“In this short video we show how we achieved this with the aid of just a few custom laser cut parts and give a quick demo using the excellent SDRangel software to view 2.4 GHz spectrum.”

The video is available now over on the LimeSDR Mini Crowd Supply page.

Lucas Teske has released a new alpha of the OpenSatelliteProject’s SatHelperApp software, which now includes support for ingesting GOES files and built-in support for the LimeSDR family.

“New application now supports GOES Ingestion (output files) and has embedded routines for using AirSpy and LimeSDR,” Lucas writes on Twitter of Alpha 0.2 of the SatHelperApp, a key part of the OpenSatelliteProject. “No external dependencies (besides libusb and normal Linux stuff) needed! Windows app should be similar.”

In addition to the new SatHelperApp release, Lucas has also been working on documenting the Limedrv wrapper for accessing the LimeSDR from GoLang. “Soon I will make an article about using Limedrv + GoLang + LimeSDR + SegDSP for doing a simple FM Radio program,” he promises.

Lucas’ Limedrv documentation is available on the Myriad-RF wiki, while SatHelperApp Alpha 0.2 can be found on the OpenSatelliteProject GitHub repository.

Signals Everywhere has published a video demonstrating how to use the DATV Express package to transmit DVB-S video via a LimeSDR under Windows, to be followed by a written set of instructions in the near future.

Published on the official YouTube channel, the video walks through the use of DATV Express – which has supported the LimeSDR and LimeSDR Mini since version 1.25 – to transmit video as DVB-S using a connected webcam as a live video source. While the demonstration uses H.262 (MPEG-2) video, Signals Everywhere indicates that it is possible to use a more efficient codec such as H.264 (MPEG-4 AVC).

The video is available now on YouTube, with a full write-up to follow.

Talks from the GNU Radio Conference 2018 (GRCon18) have been uploaded to YouTube, covering subjects from signal analysis and the management of RF spurs to open source radio telescopes and machine learning for sensing and communications systems.

The eighth annual GNU Radio Conference, GRCon18, was held late last year in Henderson, Nevada, attracting presenters discussing a range of topics related to the open source project. All videos have now been made available via the GNU Radio YouTube channel, with highlights including a talk on open-source radio telescopes, a guide on why signals might not match textbook examples, accelerating software defined radios, a keynote from the SatNOGS project, and a technical update on GNU Radio itself.

For a full list of the videos, which include links to slide decks and other resources where available, head to the GNU Radio YouTube channel.

Dirk Gorissen has written of a project last year to track orangutans in Borneo, in partnership with International Animal Rescue, using an SDR-equipped drone system.

“Compared to my last visit, the v2.0 system was a lot better integrated. There was a dedicated payload box, cleaner wiring and a much larger and more capable drone with a Pixhawk2/APM flight controller,” Dirk writes. “On the radio side I was still using a square loop but switched to an AirSpy Mini & pre-amp combo instead of the RTL-SDR I was using originally.

“The signal processing logic was completely re-written using GNU Radio (with helpful guidance from the community) and now featured three approaches for deciding if an implant pulse was detected or not: peak detection in the power spectrum, cross correlation with the known template in the time domain, and using a phase locked loop (PLL). The GNU Radio code was wrapped in Python and a set of heuristics was used to filter the output of each of the approaches and generate a series of plots to report back to the user. This was done through a react.js web application (which also was largely rewritten) served through Flask and the drone’s own Wi-Fi hotspot. All of this running on an x86 UDoo board instead of the Odroid XU4 I had used previously.”
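To give a feel for the second of those approaches, cross correlation with a known template in the time domain, here is a minimal plain-Python sketch. It is not Dirk's GNU Radio code: the function names, the rectangular template, the sample values, and the threshold are all assumptions invented for this example.

```python
def cross_correlate(signal, template):
    """Slide the template over the signal; return the dot product at each lag."""
    n, m = len(signal), len(template)
    return [
        sum(signal[lag + k] * template[k] for k in range(m))
        for lag in range(n - m + 1)
    ]


def detect_pulse(signal, template, threshold):
    """Return the lag of the strongest match, or None if it is below threshold."""
    scores = cross_correlate(signal, template)
    best_lag = max(range(len(scores)), key=scores.__getitem__)
    return best_lag if scores[best_lag] >= threshold else None


# Hypothetical pulse shape: four samples of constant amplitude.
template = [1.0, 1.0, 1.0, 1.0]

# Low-level noise with a slightly distorted pulse starting at sample 4.
noise_before = [0.1, -0.2, 0.0, 0.1]
noise_after = [0.0, -0.1, 0.2]
signal = noise_before + [0.9, 1.1, 1.0, 0.8] + noise_after

print(detect_pulse(signal, template, threshold=3.0))                      # 4
print(detect_pulse(noise_before + noise_after, template, threshold=3.0))  # None
```

A fixed threshold is the simplest possible decision rule; the write-up describes a set of heuristics filtering the output of each approach, which this sketch does not attempt to reproduce.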

Dirk’s full project write-up, along with a link to the first incarnation of the system, can be found on his personal website.

The NFC Forum has announced a new release of its Near Field Communication (NFC) standard which allows for NFC-enabled devices to be charged at a rate of up to one watt using the same antenna as for communication.

“The NFC Forum’s Wireless Charging Candidate Technical Specification allows for wireless charging of small battery-powered devices like those found in many IoT devices,” explains Paula Hunter, executive director of the NFC Forum. “Our approach can help avoid the need for a separate wireless charging unit for small devices if the device includes an NFC communication interface. For example, a Bluetooth headset which includes NFC technology for pairing could also use the NFC interface for wireless charging. In this case, the NFC antenna is used to exchange the pairing information and to transfer power.”

The new specification operates at NFC’s 13.56 MHz base frequency and uses the NFC communication link to control the transfer of power. Two modes are defined: a static mode, which uses standard field strength and a constant power level, and a negotiated mode, which switches to a higher radio frequency field and supports power transfer at 250, 500, 750, and 1,000 milliwatts.

The candidate specification, which is open to feedback, is available on the NFC Forum website.

Hackaday has highlighted an Android application, compatible with Android 8.0 and above, which uses augmented reality (AR) to visualise radio frequency signal strengths in 3D space.

“Built to aid in the repositioning of his router in the post-holiday cleanup, [Ken Kawamoto’s] app uses the Android ARCore framework to figure out where in the house the phone is and overlays a colour-coded sphere representing sensor data onto the current camera image,” explains Hackaday’s Dan Maloney. “The spheres persist in 3D space, leaving a trail of virtual breadcrumbs that map out the sensor data as you warwalk the house. The app also lets you map Bluetooth and LTE coverage, but RF isn’t its only input: if your phone is properly equipped, magnetic fields and barometric pressure can also be AR mapped.”

The app is available for free download from the Google Play Store, but requires a handset with ARCore support – meaning Android 8.0 or above.

SDR Makerspace has published a piece on the gr-leo project, an open-source tool designed to simulate the telecommunications channel between a satellite and a ground station.

“Telecommunication systems are affected by a plethora of signal impairments imposed by the wireless channel. The development, debugging and evaluation of such systems should be performed under realistic channel conditions, that are difficult to be simulated on the lab. This prerequisite becomes even more crucial in the case of satellite missions. For that reason, different telecommunication channel simulation projects have made their appearance over the years. Unfortunately, most of these alternatives are proprietary and provided under expensive licences,” explains Kostis Triantafyllakis. “On the other side lies GNU Radio, the leader open source solution for signal processing and software defined radios development. GNU Radio already provides a vast variety of signal processing and channel estimation blocks, that however cannot be used thoroughly for the purposes of a space telecommunication channel.

“The gr-leo project aspires to fill this gap with the implementation of a GNU Radio module that simulates the communication of an Earth-Satellite system and a variety of impairments that may be induced from the space channel. For example, frequency shift due to the Doppler effect, the variable free-space path loss due to the satellite’s trajectory or the atmospheric effects (i.e. atmospheric gases and precipitation) along the path, are proven to pose significant degradation on the quality of the communication.”
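Two of the impairments Kostis mentions follow from textbook formulas, sketched below in plain Python. This does not use the gr-leo API: the function names are hypothetical, and the 437 MHz downlink, slant ranges, and radial velocity are illustrative assumptions rather than figures from the project. Free-space path loss is taken as 20·log10(4πdf/c), and the Doppler shift uses the first-order approximation f·v/c.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def free_space_path_loss_db(distance_m, frequency_hz):
    """Free-space path loss in dB for a given slant range and carrier frequency."""
    return 20.0 * math.log10(4.0 * math.pi * distance_m * frequency_hz / C)


def doppler_shift_hz(frequency_hz, radial_velocity_m_s):
    """First-order Doppler shift; a positive velocity means the satellite is approaching."""
    return frequency_hz * radial_velocity_m_s / C


# Assumed values for a low Earth orbit pass, chosen only for illustration.
downlink_hz = 437e6
near_range_m = 500e3     # satellite close to overhead
far_range_m = 2000e3     # satellite close to the horizon
approach_speed = 7000.0  # radial velocity in m/s

loss_near = free_space_path_loss_db(near_range_m, downlink_hz)
loss_far = free_space_path_loss_db(far_range_m, downlink_hz)
shift = doppler_shift_hz(downlink_hz, approach_speed)

print(f"Path loss at 500 km:  {loss_near:.1f} dB")
print(f"Path loss at 2000 km: {loss_far:.1f} dB")
print(f"Doppler shift while approaching: {shift / 1e3:.1f} kHz")
```

Under these assumptions the path loss varies by roughly 12 dB over the pass, since quadrupling the range adds 20·log10(4) dB, while the carrier moves by around 10 kHz in each direction. Both effects change continuously with the satellite's trajectory, which is the behaviour a channel simulator has to reproduce.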

The gr-leo source code is available on its GitLab repository under the GNU General Public Licence 3.0, while documentation is available on the Libre Space Foundation wiki.

Finally, Hackaday has highlighted a fascinating video which combines cutting-edge technology with classic ham radio: connecting Google’s voice-activated Assistant system to the ham radio network.

“This prototype is based on using a fresh IRLP [Internet Radio Linking Project] hardware setup with a simplex VHF radio attached,” William Franzin explains of his creation. “I chose this as the starting point because we have a Linux machine and all the radio/sound working. Once we have a Linux & radio setup, I created a Google developer account and learned how to build Assistant products (smart speakers) but instead of a speaker integrated it with the IRLP platform. When a radio user presses A[ssistant] or 0[perator] it calls the Assistant and then listens for voice commands. Google responds and talks to IFTTT for custom questions/responses.”

Following the use of DTMF tones to activate the system, William expanded it to use a custom wake word. “This can be done with any Linux platform using any audio codec that can be connected to a radio system,” he explains. “I’ll put together another video soon covering hardware platforms. You’ll need some basic Python skills and hardware experience to build your own version of this setup.”

Videos of William’s first DTMF-activated version and later voice-activated version are available on his YouTube channel.